🔍 The Hidden Enemy: Tackling “Too Many Open Files” Like a Pro

In the world of software engineering, not all bugs scream at you with stack traces and core dumps. Some creep up silently, throttle your systems, and bring production to its knees — all while wearing the innocent mask of a file descriptor.

Over the past decade, I've encountered the dreaded too many open files error in Node.js, Java, Python, and even Flutter desktop apps. Different languages. Different infrastructures. Same haunting problem.

In this post, I’ll walk you through:

- 🔄 A repeatable playbook to detect, recover, and fix this issue

- 📊 Real-world examples of open file descriptor explosions

- 🛠️ Tools, scripts, and design changes to prevent it from happening again

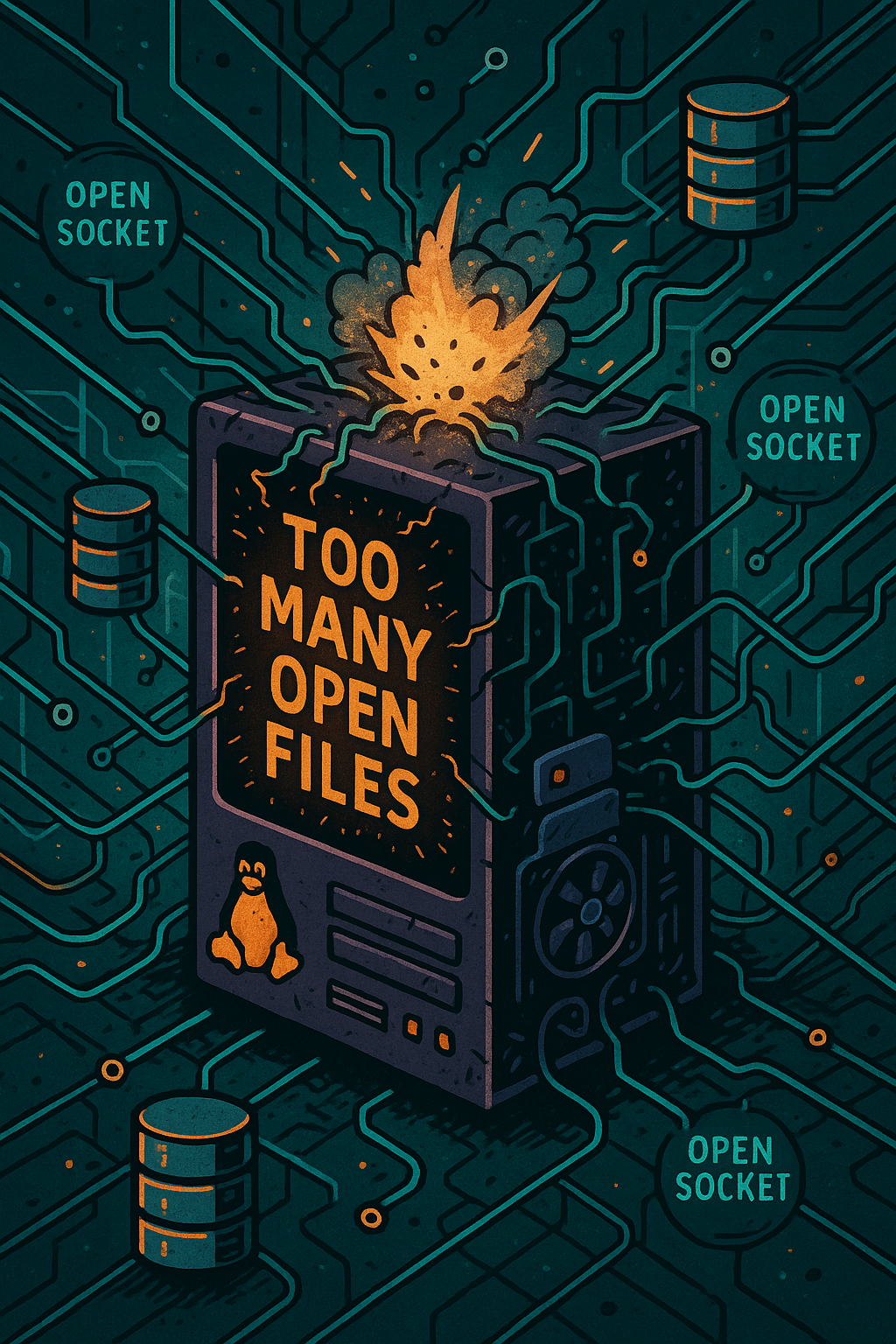

☠️ What Does “Too Many Open Files” Actually Mean?

Every process on a Unix-like system (Linux/macOS) can hold only a limited number of open file descriptors. On Linux the per-process soft limit is commonly 1024 by default; check yours with ulimit -n.

These include:

- Open files (duh)

- Open sockets (DB connections, HTTP requests)

- Pipes, devices, logs, etc.

If your app opens files or sockets and doesn't close them, over time it hits this limit and fails spectacularly.
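To make the limit concrete, here is a minimal Java sketch that leaks descriptors on purpose and measures how far the count climbs. It assumes an OpenJDK-style JVM on Linux or macOS, where the platform bean implements com.sun.management.UnixOperatingSystemMXBean; the class FdLeakDemo and its method names are made up for illustration.

```java
import com.sun.management.UnixOperatingSystemMXBean;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class, not part of any library.
final class FdLeakDemo {

    // Descriptors currently held by this JVM, or -1 if the platform does not expose them.
    static long openFds() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof UnixOperatingSystemMXBean) {
            return ((UnixOperatingSystemMXBean) os).getOpenFileDescriptorCount();
        }
        return -1;
    }

    // The per-process descriptor limit as seen by the JVM, or -1 if unavailable.
    static long maxFds() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof UnixOperatingSystemMXBean) {
            return ((UnixOperatingSystemMXBean) os).getMaxFileDescriptorCount();
        }
        return -1;
    }

    // Opens `count` streams without closing them, returns how much the FD count grew,
    // then cleans up so the demo itself does not leak.
    static long growthWhileLeaking(int count) {
        List<FileInputStream> held = new ArrayList<>();
        try {
            Path tmp = Files.createTempFile("fd-demo", ".txt");
            tmp.toFile().deleteOnExit();
            long before = openFds();
            for (int i = 0; i < count; i++) {
                held.add(new FileInputStream(tmp.toFile())); // opened, not closed in the loop
            }
            return openFds() - before;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (FileInputStream in : held) {
                try { in.close(); } catch (IOException ignored) { }
            }
        }
    }

    public static void main(String[] args) {
        System.out.println("limit:  " + maxFds());
        System.out.println("growth: " + growthWhileLeaking(50));
        System.out.println("open after cleanup: " + openFds());
    }
}
```

Each unclosed stream costs one descriptor, so a loop like this in a request handler reaches a 1024 limit after roughly a thousand requests.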

🧨 Symptoms:

- New users can't connect

- Logs stop writing

- DB connections hang

- You get: EMFILE: too many open files or Too many open files in system

🧰 The Playbook: Diagnosing and Solving “Too Many Open Files”

📌 Step 1: Capture the Scene

Run lsof on the instance where the issue is happening:

lsof -p <pid> | wc -l

Or view directly:

ls -l /proc/<pid>/fd/
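If shelling into the box is awkward, the same view can be captured from inside a JVM. The sketch below reads the /proc/<pid>/fd symlinks directly. It is Linux-only, and FdSnapshot and snapshot are hypothetical names chosen for this example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: an in-process equivalent of `ls -l /proc/<pid>/fd/`.
final class FdSnapshot {

    // Maps descriptor number -> link target. Pass "self" for the current JVM.
    // Returns an empty map when /proc/<pid>/fd does not exist (macOS, Windows, bad pid).
    static Map<Integer, String> snapshot(String pid) {
        Path fdDir = Paths.get("/proc", pid, "fd");
        Map<Integer, String> result = new TreeMap<>();
        if (!Files.isDirectory(fdDir)) {
            return result;
        }
        // The directory stream is itself a descriptor, so it is closed via try-with-resources.
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(fdDir)) {
            for (Path entry : entries) {
                try {
                    int fd = Integer.parseInt(entry.getFileName().toString());
                    result.put(fd, Files.readSymbolicLink(entry).toString());
                } catch (IOException | NumberFormatException e) {
                    // The descriptor was closed while we were iterating; skip it.
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return result;
    }

    public static void main(String[] args) {
        String pid = args.length > 0 ? args[0] : "self";
        snapshot(pid).forEach((fd, target) -> System.out.println(fd + " -> " + target));
    }
}
```

Reading another process's descriptors requires the same user or root, just as with lsof.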

💡 Automation Tip: Set up a cronjob or systemd timer to dump lsof every minute for affected services:

*/1 * * * * lsof -p $(pidof myservice) > /var/log/lsof-dump-$(date +\%s).log

🚑 Step 2: Buy Time – Scale the System

To protect the business, scale vertically or horizontally:

- Spin up another instance (horizontal)

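- Temporarily raise the file descriptor limit. This only affects the current shell and the processes started from it, so restart the service from that shell:

ulimit -n 65535

- For a persistent increase, adjust /etc/security/limits.conf (login sessions) and set LimitNOFILE= in the service's unit file under /etc/systemd/system/*.service (systemd services).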
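After raising the limit, it is worth confirming that the running process actually picked it up. On Linux the effective values are in /proc/<pid>/limits. The following is a small sketch that parses the "Max open files" row; NofileLimit is a hypothetical helper, and the sample line in the comment is illustrative.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

// Hypothetical helper for checking the effective descriptor limit of a process.
final class NofileLimit {

    // Parses a row such as:
    //   Max open files            1024                 524288               files
    // Returns {soft, hard}; "unlimited" is reported as -1.
    static long[] parse(List<String> lines) {
        for (String line : lines) {
            if (line.startsWith("Max open files")) {
                String[] cols = line.trim().split("\\s{2,}");
                return new long[] { toLong(cols[1]), toLong(cols[2]) };
            }
        }
        throw new IllegalArgumentException("no 'Max open files' row found");
    }

    private static long toLong(String value) {
        return value.equals("unlimited") ? -1 : Long.parseLong(value);
    }

    public static void main(String[] args) throws IOException {
        String pid = args.length > 0 ? args[0] : "self";
        Path limits = Paths.get("/proc", pid, "limits");
        if (!Files.isReadable(limits)) {
            System.out.println(limits + " is not readable (not Linux, or no permission)");
            return;
        }
        long[] nofile = parse(Files.readAllLines(limits));
        System.out.println("soft=" + nofile[0] + " hard=" + nofile[1]);
    }
}
```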

🔬 Step 3: Investigate the LSOF Dumps

Analyze the lsof output:

lsof -p <pid> | awk '{print $5}' | sort | uniq -c | sort -nr
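The same grouping can be done on the symlink targets found under /proc/<pid>/fd, which on Linux look like socket:[12345], pipe:[678], anon_inode:[eventpoll], or a plain path. Below is a minimal sketch; FdClassifier and its category names are assumptions for this example, and the sample targets in main are made up.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical helper: buckets /proc/<pid>/fd link targets by kind.
final class FdClassifier {

    static String kind(String target) {
        if (target.startsWith("socket:")) return "socket";
        if (target.startsWith("pipe:")) return "pipe";
        if (target.startsWith("anon_inode:")) return "anon_inode";
        if (target.startsWith("/dev/")) return "device";
        if (target.endsWith(" (deleted)")) return "deleted file";
        return "file";
    }

    // Counts targets per kind, sorted by kind name.
    static Map<String, Long> countByKind(Collection<String> targets) {
        return targets.stream()
                .collect(Collectors.groupingBy(FdClassifier::kind, TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        // Made-up sample data standing in for a real dump.
        List<String> sample = Arrays.asList(
                "socket:[1001]", "socket:[1002]", "socket:[1003]",
                "pipe:[2001]", "/var/log/app.log",
                "/tmp/upload-1.bin (deleted)", "/dev/null");
        // prints {deleted file=1, device=1, file=1, pipe=1, socket=3}
        System.out.println(countByKind(sample));
    }
}
```

A bucket that keeps growing between dumps points at the leak: sockets suggest connections, plain or deleted files suggest unclosed handles.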

Common culprits:

- Leaking DB connections (especially in ORMs)

- Unclosed file handles (logs, uploads)

- Websocket or long-polling endpoints

🧠 Real-world catch:

In one case, a missing .close() in a file streaming API caused 80K open file handles over a weekend.

🛠️ Step 4: Apply the Fix (Corrective Action)

Once you've found the offender:

- Add finally { resource.close(); }

- Switch to connection pooling

- Use file streams responsibly (avoid reading entire files into memory)

- Upgrade broken libraries
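Pooling helps because it puts a hard cap on how many descriptors a code path can hold at once. The sketch below shows the idea with a fixed-size pool that always returns the resource in a finally block. BoundedPool is a hypothetical, deliberately simplified class; production code would more likely rely on an established pooling library.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical, simplified pool: at most `size` resources ever exist.
final class BoundedPool<T> {
    private final BlockingQueue<T> idle;

    BoundedPool(int size, Supplier<T> factory) {
        idle = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) {
            idle.add(factory.get());
        }
    }

    // Borrows a resource, runs the work, and returns the resource even if the work throws.
    <R> R withResource(long timeoutMillis, Function<T, R> work) {
        T resource;
        try {
            resource = idle.poll(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for a resource", e);
        }
        if (resource == null) {
            // Failing fast here is the point: callers queue or back off instead of opening more.
            throw new IllegalStateException("pool exhausted");
        }
        try {
            return work.apply(resource);
        } finally {
            idle.add(resource);
        }
    }

    int available() {
        return idle.size();
    }

    public static void main(String[] args) {
        // StringBuilder stands in for a real connection type.
        BoundedPool<StringBuilder> pool = new BoundedPool<>(2, StringBuilder::new);
        int length = pool.withResource(100, sb -> sb.append("query").length());
        System.out.println("result=" + length + " available=" + pool.available());
    }
}
```

With this shape, a burst of traffic turns into waiting or a fast failure rather than thousands of new sockets.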

✅ Deploy the fix and monitor with:

watch -n 5 "lsof -p <pid> | wc -l"

🔁 Step 5: Design It Right Next Time (Preventive Action)

Make your architecture resilient:

🔒 Use Safe Patterns

import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;

public class SafeFileReader {
    public static void main(String[] args) {
        try (BufferedReader reader = new BufferedReader(new FileReader("data.txt"))) {
            String line;
            while ((line = reader.readLine()) != null) {
                System.out.println(line);
            }
        } catch (IOException ex) {
            ex.printStackTrace();
        }
    }
}

🔍 try-with-resources automatically closes the BufferedReader even if an exception occurs, so no finally block is needed.

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;

public class SafeDBExample {
    public static void main(String[] args) {
        String url = "jdbc:mysql://localhost:3306/mydb";
        String query = "UPDATE users SET last_login = NOW() WHERE id = ?";
        try (
            Connection conn = DriverManager.getConnection(url, "user", "password");
            PreparedStatement stmt = conn.prepareStatement(query)
        ) {
            stmt.setInt(1, 42);
            stmt.executeUpdate();
        } catch (SQLException e) {
            e.printStackTrace();
        }
    }
}

💡 Both Connection and PreparedStatement are closed automatically, preventing connection leaks in busy environments.
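One easy-to-miss variant of the same pattern: the Stream returned by java.nio.file.Files.lines keeps its file open until the stream is closed, and a terminal operation like count() does not close it. Here is a short sketch of the safe form; LineCounter is a hypothetical class name.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.stream.Stream;

// Hypothetical example class.
final class LineCounter {

    // Leaky form, shown only as a comment: Files.lines(path).count();
    // The file stays open until the stream is closed or garbage collected.

    // Safe form: the stream, and the file behind it, is closed when the block exits.
    static long count(Path path) {
        try (Stream<String> lines = Files.lines(path)) {
            return lines.count();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("lines-demo", ".txt");
        Files.write(tmp, Arrays.asList("first", "second", "third"));
        System.out.println(count(tmp)); // prints 3
        Files.delete(tmp);
    }
}
```

The same applies to Files.list and Files.walk, which also return streams backed by open resources.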

🧪 Add Health Checks

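- Monitor FD count via Prometheus node exporter (node_filefd_allocated). Note that this metric is system-wide; for a single process, Prometheus client libraries typically expose process_open_fds and process_max_fds.

- Trigger alerts if it exceeds a threshold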
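The threshold check itself is simple enough to run inside the service as well. Here is a rough sketch that compares open descriptors against the limit on a timer. It assumes an OpenJDK-style JVM on Linux or macOS; FdHealthCheck, the 0.8 ratio, and the one-second interval are illustrative choices, not recommendations from any tool.

```java
import com.sun.management.UnixOperatingSystemMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical in-process health check.
final class FdHealthCheck {

    // True when usage is at or above the given fraction of the limit.
    // Unknown values (negative or zero limit) never trigger an alert.
    static boolean aboveThreshold(long open, long max, double ratio) {
        return open >= 0 && max > 0 && (double) open / max >= ratio;
    }

    // Returns {open, max}, or {-1, -1} when the platform does not expose the counts.
    static long[] current() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof UnixOperatingSystemMXBean) {
            UnixOperatingSystemMXBean unix = (UnixOperatingSystemMXBean) os;
            return new long[] { unix.getOpenFileDescriptorCount(), unix.getMaxFileDescriptorCount() };
        }
        return new long[] { -1, -1 };
    }

    public static void main(String[] args) throws InterruptedException {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        timer.scheduleAtFixedRate(() -> {
            long[] fds = current();
            String usage = fds[0] + "/" + fds[1];
            if (aboveThreshold(fds[0], fds[1], 0.8)) {
                System.err.println("WARN fd usage " + usage);
            } else {
                System.out.println("ok fd usage " + usage);
            }
        }, 0, 1, TimeUnit.SECONDS);
        Thread.sleep(3000); // demo only; a real service would keep the timer running
        timer.shutdown();
    }
}
```

Alerting on a ratio rather than an absolute count keeps the check valid after the limit is raised.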

🏗️ Architectural Ideas

- Migrate to serverless for short-lived processes

- Use reverse proxies that handle retries and timeouts

- Isolate long-lived connections to their own workers

Conclusion

The too many open files error isn't just a technical issue — it's a design smell. Systems that silently leak resources are ticking time bombs.

Every time I've encountered this in my career — across startups, scale-ups, and monoliths — the solution followed the same 5-step cycle:

Detect → Stabilize → Investigate → Fix → Redesign

And now, with this playbook, I never fear it again.

✨ TL;DR

✅ Monitor FD usage proactively

✅ Automate lsof dumps

✅ Scale safely under pressure

✅ Design for cleanup

✅ Treat leaks as architecture bugs, not just coding bugs

📬 Got war stories about ulimit pain?